Just like in many other countries, here in the UK there is a concept of document certification, a legal mechanism that allows residents to prove their identity by supplying certified photocopies of their identification documents (usually a driving licence and a recent utility bill) instead of the originals. This comes particularly handy if someone needs to prove their identity to a distant entity without going there physically or sending the originals by post. In most scenarios, certified documents have the same legal power as the corresponding originals.

Certification service is usually provided by public entities or persons ‘of good standing’, such as solicitors, local councils, notaries, and also the post office. An authorised official compares the photocopy to the original document, making sure they look exactly the same, and certifies the authenticity of the copy with their signature and/or seal.

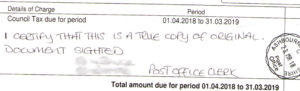

A copy of a council tax bill certified by the post office

A copy of a council tax bill certified by the post office

From the point of view of cryptography, document certification is a typical Trusted Third Party scheme. By certifying your documents, the official acts as an independent and verifiable third party with no conflict of interest with the originator and the recipient of the documents whatsoever.

Sadly, the scheme, as used in the UK and other European countries, is not entirely flawless. There are scenarios where a malicious individual or company can fraudulently use it to steal the document owner’s identity and use it for their own purposes. Imagine the following scenario:

1) Your new tax advisor asks you to prove your identity to her by sending certified copies of your ID and address to her (this is a genuine legal requirement imposed by Anti Money Laundering regulations).

2) She then remotely registers a limited company in Jersey. When asked for her ID by the company registrar (who follows the same AML ruling), she sends them the certified copies she’d received from you. The registrar has no reason not to trust certified documents, so they accept them.

3) Congratulations, now you are a rightful and fully accountable (with a stress on ‘fully accountable’) owner of that company in Jersey. Profit? Not for you, I’m afraid.

Cryptographers call this type of exploitation ‘a man in the middle.’ Being way more popular in electronic communication protocols, it can still be used to hack offline protocols, just like this one above. What you need for this attack to work, apart from the actors – a sender, a receiver, and, of course, that dark man in the middle who will be playing pass the parcel with both of you, – is an important factor called the absence of proof of origin.

The company registrar in Jersey has no means to verify that the documents they receive come from the real you. Really, what certification proves is that the photocopies are authentic. It doesn’t prove that you’ve made these copies willingly, knowingly, or with intent to set up an LTD. On the other hand, you have no means to ensure that the copies you sent to the tax advisor won’t go somewhere else. That’s how the concept of ‘proof of authenticity of a photocopy’ is mistakenly substituted for ‘proof of ID’.

So how to avoid getting your identity stolen by a disgraceful service?

Try to avoid employing photocopy certification at first place, if possible, even if such option is on the table. If there is a viable option to proof your identity in person, do it. A 30 mile journey is nothing comparing to dealing with the consequences of identity theft.

If you still have to do it remotely, perform due diligence on whoever is requesting the certification. Is it a reputable company? How long has it been around? Does it comply with the data protection act?

If possible, write a context-specific note on the certified copy. Let your note describe what this copy is intended for. For example,

Attn: Ms J Roberts, City Solicitors LLP, Re: Capital Gains Tax Affairs, in response to your letter Ref 30284-1 of 30/03/2018, 02/04/2018.

This will prevent the document from being re-used for a different purpose, as a writing of such kind will most certainly attract attention of an unintended recipient.

I believe that rules of document certification should change in a similar manner to actually provide for ‘proof of ID’ for the requester and ‘proof of use’ for the submitter, rather than a dubious ‘proof of authenticity of a photocopy’. What we need to do is extend the certifying writing with two pieces of information – ‘labels,’ – one from the requester, and another from the submitter.

The certifying official would then write both labels on the photocopy being certified, for example,

I certify these documents following a request from High Street Solicitors LLP Ref #311-235-1 (requester’s label), to be used in a civil case #213-1 ONLY (your label)

– thus unequivocally binding the certification to that specific context.

Having received a certified document created in such manner, the requester would know that it was created in response to their specific request. The document holder, on the other hand, could also be sure that the photocopy can only be used for the purpose they declared in their part of the certifying writing.